An autonomous wheelchair meets Li Keqiang

17 October 2016

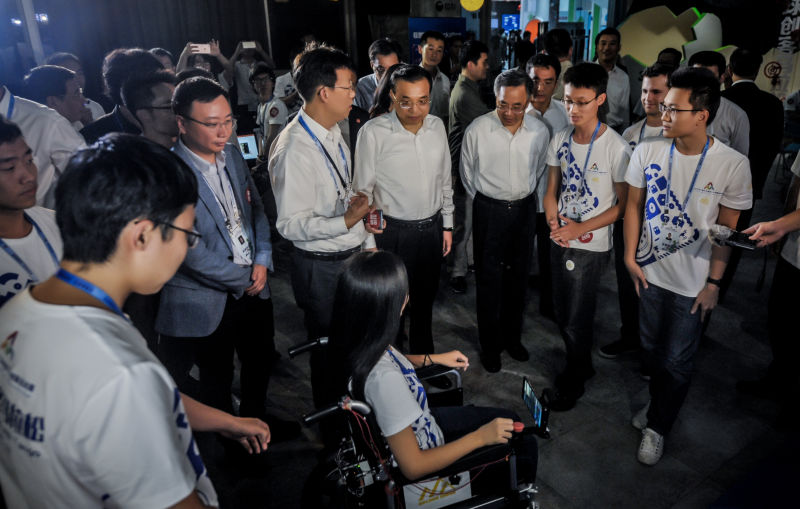

When AVR meets AR, an autonomous wheelchair is born that gets to be introduced to the Chinese Premier of the State Council, Li Keqiang, during the 2016 Mass Innovation and Entrepreneurship week in Shenzhen!

Between the 8th and 12th of October, I traveled to Shenzhen, China, to participate in a hackathon organized by Lenovo. It focused on Google’s revolutionary AR (Augmented Reality) technology, Project Tango, which is being deployed in one of the Chinese tech giant’s latest smartphones, the Phab 2 Pro. The project that my team and I developed during our days there was an autonomous wheelchair, which was also selected to be presented to the Chinese second-in-command, Li Keqiang. But let’s take it from the beginning…

Almost one year ago, following the success of the World’s first Android Autonomous Vehicle, I was contacted by Sarah Cobb, a Google Program Manager responsible for Project Tango. She offered our team two Tango-enabled tablets to enhance our autonomous vehicle with the amazing AR capabilities that the Google technology had to offer.

Specifically, mobile devices using Project Tango are equipped with hardware and software, exposed through a user-friendly API, that enable the device to sense its surroundings and be aware of its own position in three-dimensional space. In particular, the functionality of Project Tango can be outlined in three concepts: a) Motion Tracking, b) Area Learning, c) Depth Perception. A plethora of projects are based on this technology, including one by NASA, and many applications (mainly games) have been published.

Some members of the team behind the Android Autonomous Vehicle saw the great potential in this technology and formed Golden Ridge, a start-up company with members from Sweden and China that aims to provide future-proof robotics for all! Golden Ridge Robotics aims to popularize AR and, through it, to change the lives of everyday people by giving them the chance to reap the benefits of the latest technological advances at an affordable cost, through the reuse of existing mobile devices.

At the end of September, Golden Ridge CEO and classmate Jiaxin Li gave me a call and almost frantically talked about an opportunity that could not be missed. Lenovo was organizing a hackathon in Shenzhen and the idea that he had submitted was not only approved, but also selected to be presented to the Chinese Premier, Li Keqiang, who was going to visit the exhibition the hackathon was a part of. This, of course, was provided that we were able to deliver a satisfactory prototype by October the 12th.

Jiaxin’s groundbreaking idea was an autonomous wheelchair that uses a Project Tango device to learn an area and navigate through it. Additionally, using computer vision and machine learning, it would interpret the objects and humans around it in order to navigate more safely and effectively. Such a product could drastically improve the quality of life of many!

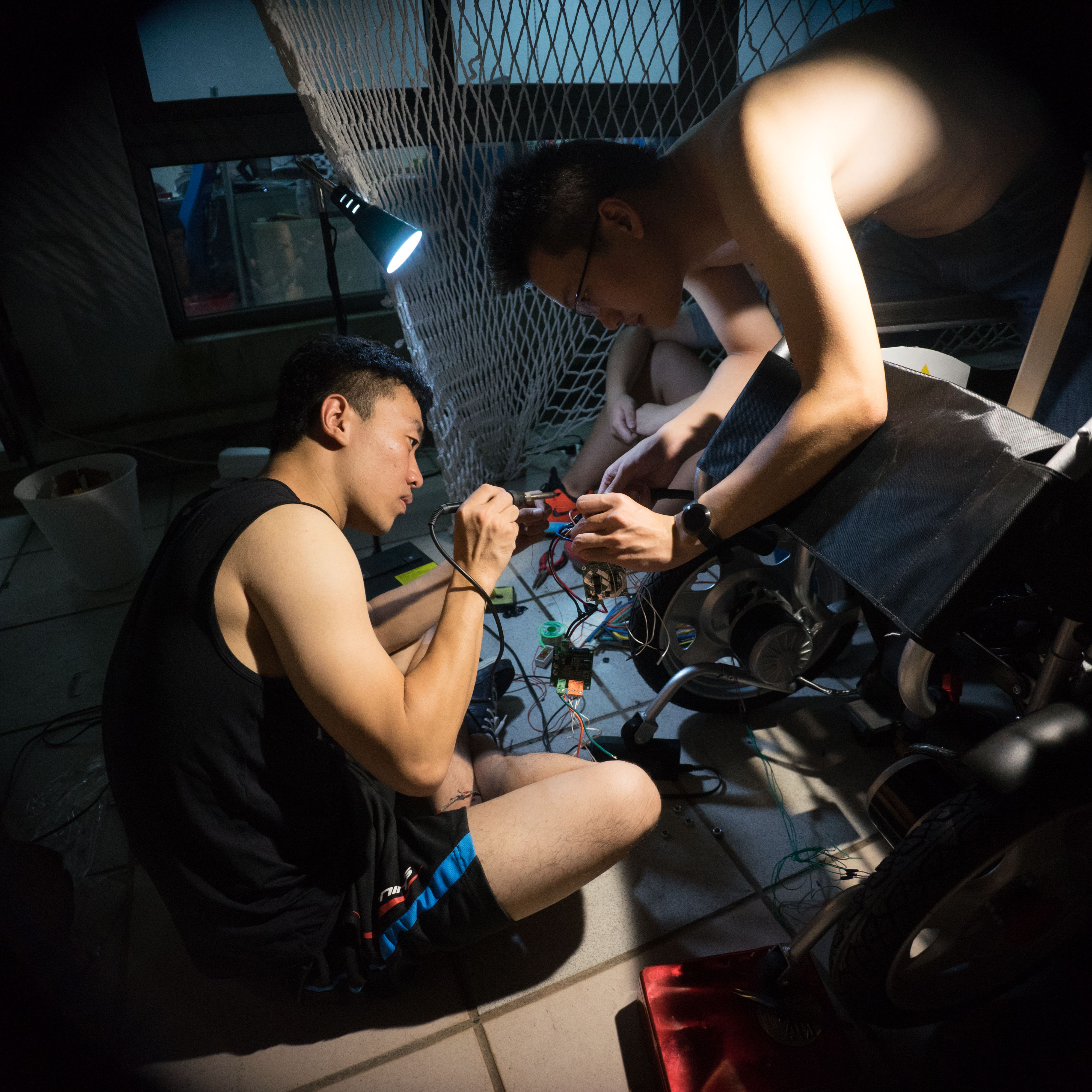

As it is based on a relatively inexpensive mobile device and an Android application, it is many orders of magnitude cheaper than the competing solutions on the market. Just imagine the possibilities of having a wheelchair that can be “ordered” to go to different locations inside a house by a series of simple taps on a mobile phone screen! Jiaxin had already gathered a team of very talented students and researchers from China (Yin Jiao, Sifan Lu, Dapeng Liu, Yifeng Huang, Dong Dong, Chen Liang, Junfeng Wu, Liu Yang) but also wanted my know-how on the embedded side of things. The chance to create something that could change the world and to meet the Chinese Premier was too good to miss. I requested a few days off from my job and, before I knew it, I was getting a temporary 5-day visa for Shenzhen at the Luohu border crossing between Hong Kong and mainland China!

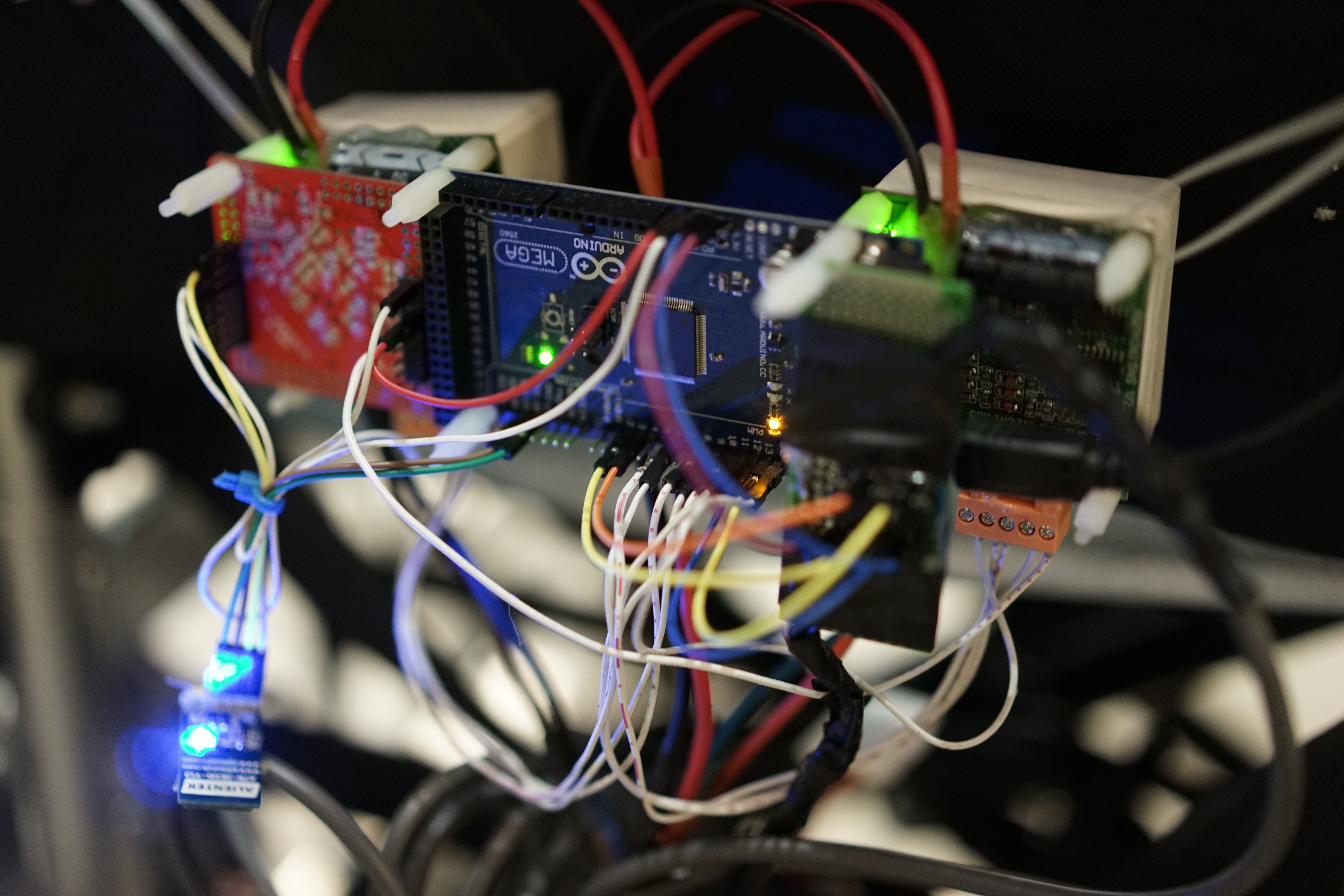

The system that we created is composed of a Lenovo Phab 2 Pro, the brain of the system, which controls an Arduino Mega via a BLE connection. The Arduino in turn drives two custom-made motor controller boards. On the phone, we created a Unity-based Android application that enables the user to scan and “teach” an area to the phone, which is then saved as an “Area Description File”. Next, the various waypoints that the wheelchair should follow are simply tapped on the screen, through the phone’s live camera feed.

To give an illustrative example, everything begins with the room or the house being scanned, which is achieved simply by selecting the appropriate function in the application and walking around holding the phone. After this is complete, if the wheelchair should pass through a door, the user points the phone towards that door and merely taps on it to create a waypoint. At that moment, the Project Tango software will have already created a model of the area and will know where the selected spot lies in relation to the model. Finally, it is only a matter of comparing the current location and orientation of the phone with the desired one to derive the speed and angle at which the wheelchair should travel. Remember, since the phone will be in an area already scanned, it will auto-magically know its current position, thanks to the great technology by Google and Lenovo!
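The comparison between the current pose and the selected waypoint can be sketched roughly as follows. This is only an illustration in Python, not the code of our (unreleased) Android application: the function name `steering_command`, the flat 2D floor plane, and the `cruise_speed` and `arrive_radius` parameters are all assumptions made for the example.

```python
import math


def steering_command(x, y, heading, target_x, target_y,
                     cruise_speed=0.5, arrive_radius=0.2):
    """Return (speed, heading_error) for driving towards a waypoint.

    Hypothetical helper: positions are in meters on a 2D floor plane and
    angles are in radians, measured counter-clockwise from the x axis.
    """
    dx = target_x - x
    dy = target_y - y
    distance = math.hypot(dx, dy)

    # Close enough to the waypoint: stop and report no deviation.
    if distance <= arrive_radius:
        return 0.0, 0.0

    # Direction from the current position to the waypoint.
    bearing = math.atan2(dy, dx)

    # Deviation from the desired orientation, wrapped to [-pi, pi)
    # so the wheelchair always turns the shorter way around.
    error = (bearing - heading + math.pi) % (2 * math.pi) - math.pi
    return cruise_speed, error
```

A positive error here means the waypoint lies to the left of the current heading, a negative one that it lies to the right. The speed and error pair is the kind of information that would then be sent to the wheelchair.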

Once the wheelchair is instructed to start following the waypoints, the application sends the speed the wheelchair should have, as well as the current deviation (error) from the desired orientation. The wheelchair, which is running the Smartcar library, uses two separate PID controllers to maintain its speed and to change its turning angle dynamically, depending on the current deviation from the target. The controller that maintains the speed was already implemented in the library, so during the competition we merely developed and calibrated the algorithm that compensates for the angle. You can check out the Arduino code running on the wheelchair here, but please note that it uses the hackathon branch of the Smartcar library, which at the time of writing is not merged into master. The code for the Android application is not yet released.
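For readers unfamiliar with PID control, here is a minimal, generic sketch of the idea behind the angle compensation. It is written in Python for readability and is not the Arduino code running on the wheelchair or the Smartcar library’s implementation; the class name, the gains and the output limit are hypothetical values chosen for the example.

```python
class PID:
    """A textbook PID controller with a clamped output (illustrative only)."""

    def __init__(self, kp, ki, kd, output_limit):
        self.kp = kp
        self.ki = ki
        self.kd = kd
        self.output_limit = output_limit
        self.integral = 0.0
        self.previous_error = None

    def update(self, error, dt):
        """Return the correction for the given error after dt seconds."""
        self.integral += error * dt

        # No derivative term on the very first sample.
        if self.previous_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.previous_error) / dt
        self.previous_error = error

        output = (self.kp * error
                  + self.ki * self.integral
                  + self.kd * derivative)

        # Keep the turning command within what the motors can handle.
        return max(-self.output_limit, min(self.output_limit, output))


# Hypothetical gains: the correction would be applied as a difference
# between the left and right motor speeds.
angle_pid = PID(kp=2.0, ki=0.1, kd=0.05, output_limit=1.0)
turn = angle_pid.update(error=0.3, dt=0.05)
```

In a setup like ours, the error fed into such a controller would be the orientation deviation received from the phone, and the gains would have to be calibrated on the actual wheelchair.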

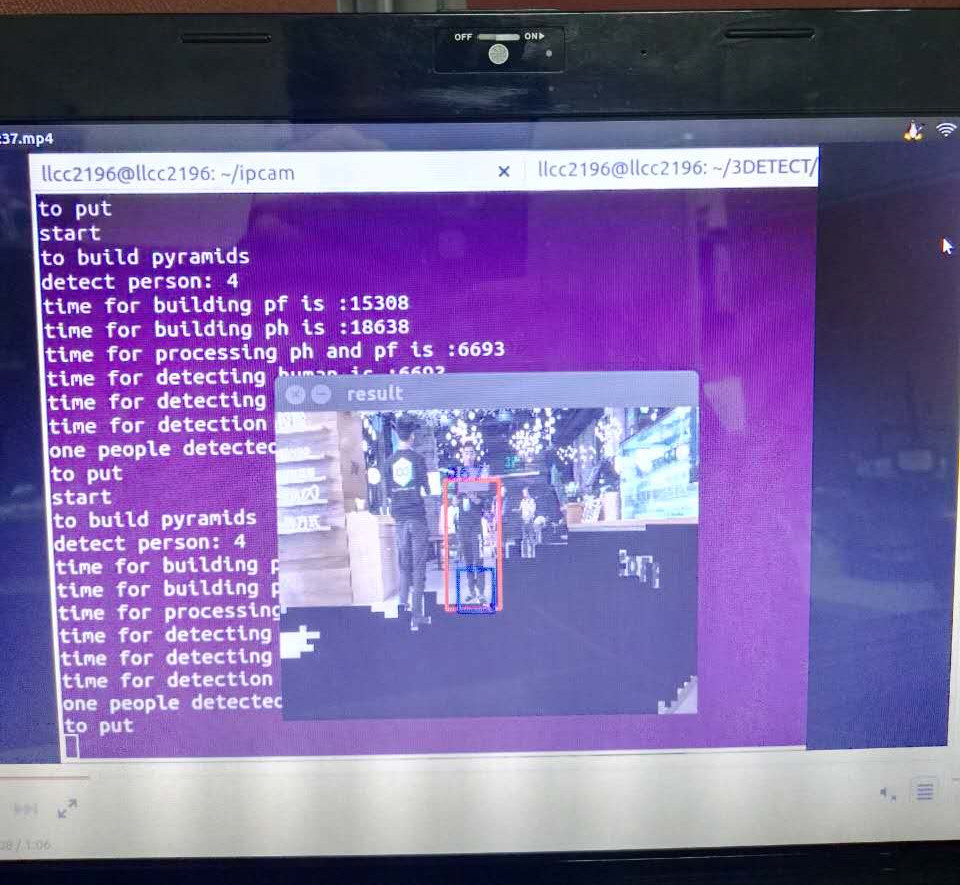

Another cool feature that we prototyped, but did not manage to integrate during the hackathon, was real-time object identification and recognition using computer vision and machine learning. This will enable the system to comprehend its surroundings better and also pave the way for some very interesting use cases, such as having the wheelchair navigate to objects whose position can change, e.g. instructing the wheelchair to take the user to the nearest glass of water.

During development we ran into various problems, from the wheelchair’s steering being physically unresponsive to rapid changes, to finding the correct way to generate the Area Description File. However, after a lot of hard work and very little sleep, we managed to make everything work, which gave us a tremendous sense of satisfaction! Here is a video of the prototype:

The organizers were very pleased with our work and the green light was given to present the product to Li Keqiang himself. I felt very proud not only because the Premier learned about our work, but also since I got to personally speak to him and shake his hand. :)

CCTV, which is China’s largest and most powerful TV station, as well as many other channels and news media, reported on the event and our project in particular. The picture of the Premier talking to us can be found in an article on the official website of the Chinese State Council. Watch from 00:24 in the video below.

Last but definitely not least, credit should be given to the rest of the Golden Ridge team, whom I would like to thank for their efforts and their great hospitality in China: Jiaxin Li, Yin Jiao, Sifan Lu, Dapeng Liu, Yifeng Huang, Dong Dong, Chen Liang, Junfeng Wu, Liu Yang. Shenzhen is a great city and, as I did not have enough time to explore it thoroughly, I promised to return soon!