The world's first Android autonomous vehicle

29 June 2015

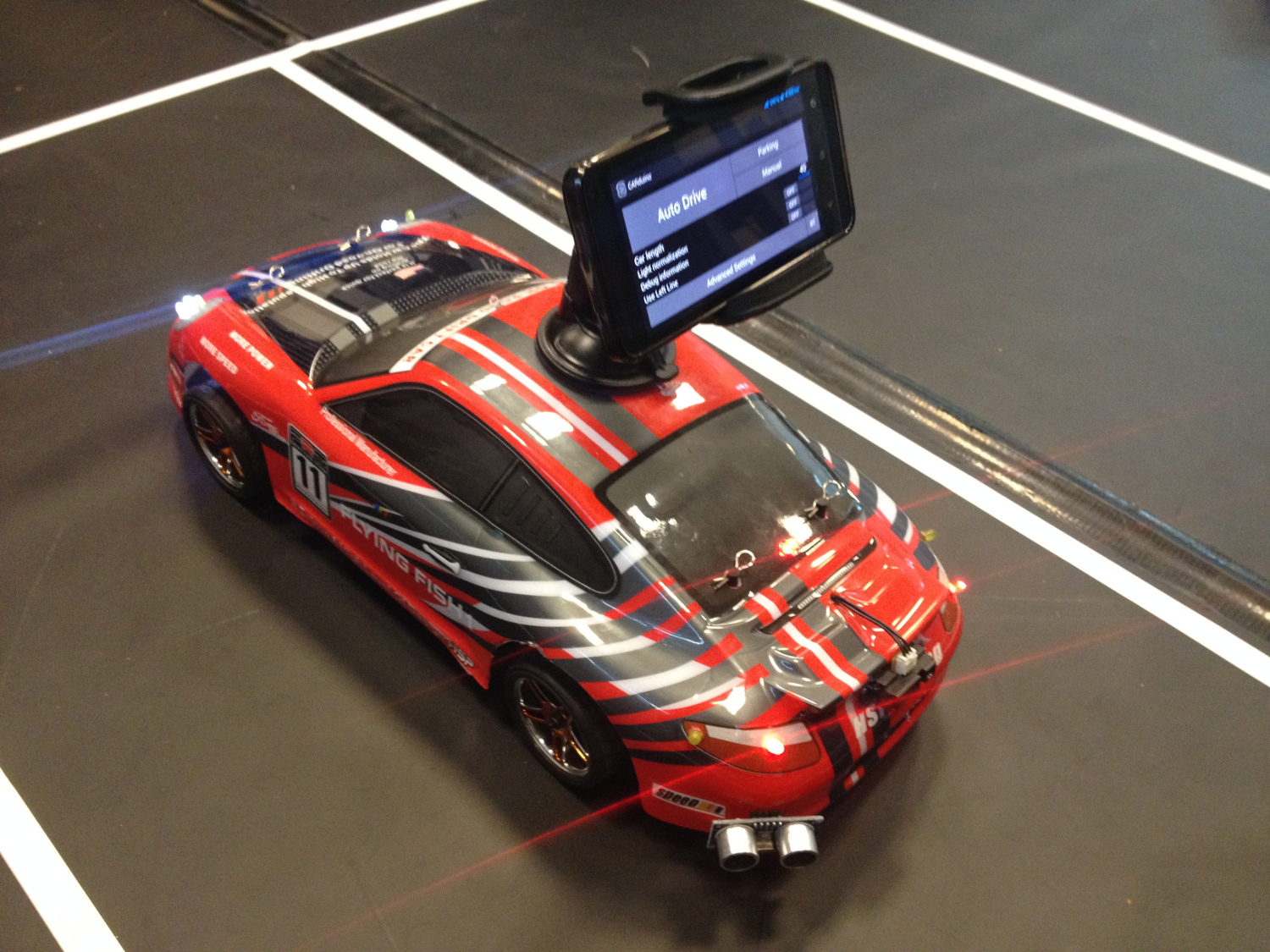

Team Pegasus created an autonomous vehicle that uses machine vision algorithms, together with data from its on-board sensors, to follow street lanes, perform parking maneuvers and overtake obstacles blocking its path. The innovative aspect of this project is, first and foremost, the use of an Android phone as the unit that performs the image processing and decision making. The phone wirelessly transmits instructions to an Arduino, which controls the physical aspects of the vehicle. Secondly, the various hardware components (e.g. sensors and motors) are handled programmatically in an object oriented way, using a custom made Arduino library. This enables developers without a background in embedded systems to accomplish their tasks easily, without having to care about lower level implementation details.

The use of a common mobile phone, instead of a specialized device (e.g. a Linux single-board computer), offers much higher deployability, user friendliness and scalability. We did not find any Android-based autonomous vehicles that could be deployed on the road in the literature. The team therefore believes that this avant-garde work can form the basis of further research on autonomous vehicles controlled by consumer, handheld, mobile devices.

This article covers the development and the implemented features of the first Android autonomous vehicle. It was created by Team Pegasus over the last couple of months, within the context of the DIT168 course at the University of Gothenburg. The rest of Team Pegasus, who worked on this project and whom I would like to thank very much, were (in alphabetical order): Yilmaz Caglar, Aurélien Hontabat, David Jensen, Simeon Ivanov, Ibtissam Karouach, Jiaxin Li, Petroula Theodoridou.

Related repositories

Driving logic (for OpenDaVinci simulation)

Background story

In the DIT168 course, offered by professor Christian Berger, the students were divided into groups of 8 and tasked with creating self-driving vehicles that could follow street lanes, overtake obstacles and perform parking maneuvers. To achieve that, they were supplied with an RC car, a single board Linux computer (ODROID-U3), a web camera (to provide machine vision capabilities to the system) as well as a plethora of sensors and microcontrollers. Over the span of approximately three months, they had to first implement the various features in a virtual simulation environment and then deploy and integrate them on the actual vehicle. The OpenDaVinci middleware was suggested by the course administration as both the testing and the deployment platform.

This was an overview of the default course setting, which has been working well over the years the course has been taught. However, “well” was not good enough for us. :-)

The problem

To begin with, we did not like how the previous end products looked. In particular, all of them were characterized by a tower-like structure on which the webcam was mounted. Because of that, a hole had to be carved out of the vehicle’s default enclosure. This plastic enclosure merely serves aesthetic purposes, but since it makes the vehicle look like… a vehicle, we considered it important to maintain its integrity.

Furthermore, the OpenDaVinci platform seemed rather excessive for what we were trying to do. Since OpenDaVinci is designed as a distributed, platform independent solution that even includes a simulation environment, it inevitably comes with a certain degree of complexity, especially when it comes to deployment and use. Do not get me wrong, OpenDaVinci seems to be software with a lot of potential, however we believed we could make do without it. Last but not least, everyone has been doing essentially the same thing all these years: using a Linux single board computer, connected to a camera and some kind of microcontroller and sensors, to perform the given tasks. We wanted to be fundamentally different and innovative.

How

The three aforementioned problems, a) the “ugly” appearance, b) the excessive software and c) the lack of originality in the default setup, led us to embrace Android as the optimum solution. By using an Android mobile device as the entity that handles the decision making, we first of all managed to combine the functionality of the single board computer and the webcam into one component, thus saving considerable space and scoring many points on the “beauty” scale. Moreover, by using a single dedicated Android application, we circumvented OpenDaVinci’s dependencies, deployment difficulties and space requirements. Finally, to our knowledge such an initiative had never been taken before, either in the context of the specific course or globally. We therefore found it particularly intriguing to create something that would really stand out in terms of originality, future potential and, of course, performance.

We began development on four different fronts. First, there was the physical layer of the vehicle, which included the assembly of the various components, the design of custom parts and all the programming involved. This is where I was mostly involved, so I will describe it in more detail later. Jiaxin Li also took part in this by providing input and valuable help during assembly.

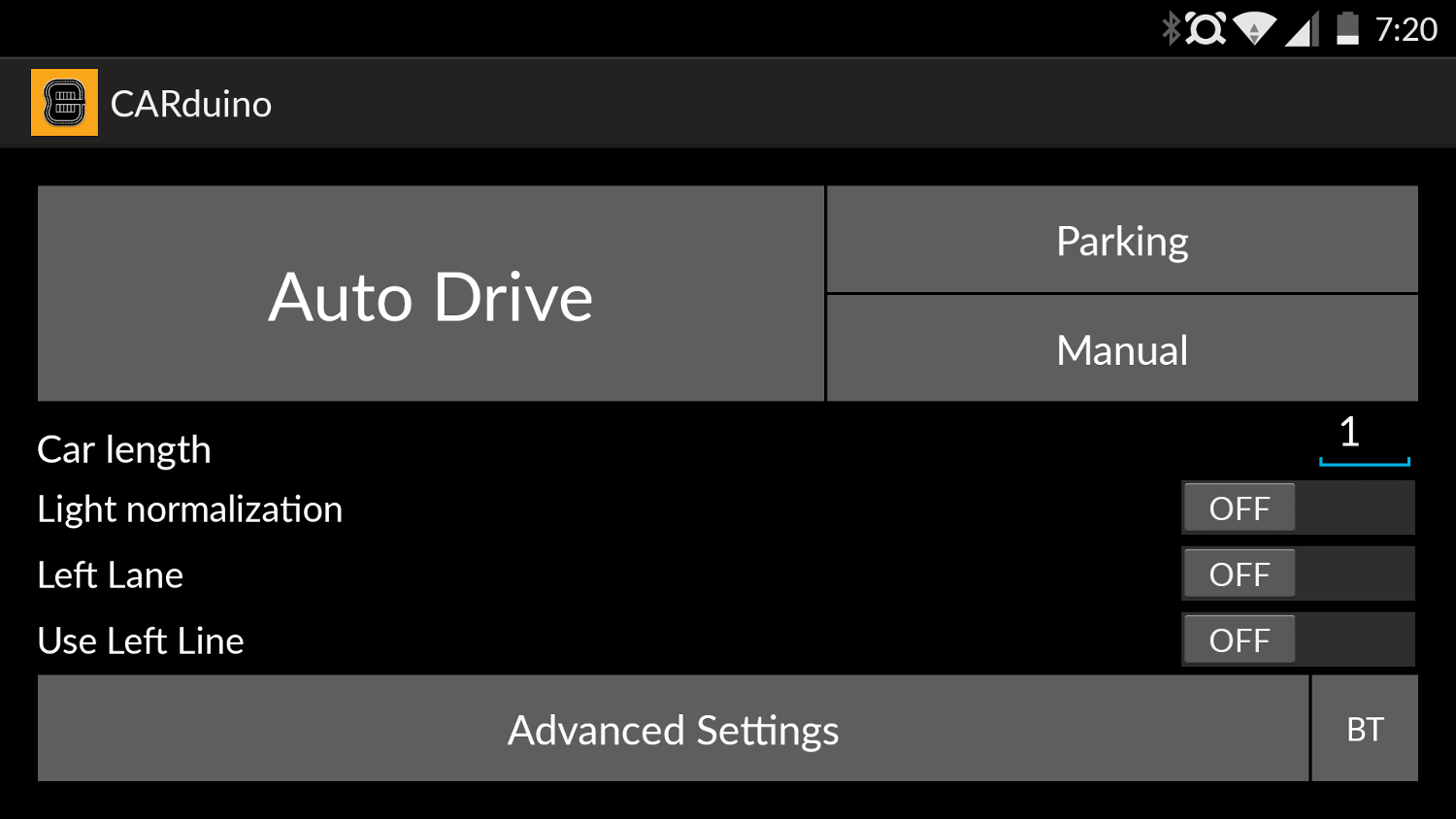

Then, there was the front end, which included an Android application that communicated via Bluetooth with the on-board microcontroller, which in turn drove the motors and parsed data from the sensors. I was initially involved in setting up the communication channel and protocol, however I soon focused my efforts on the physical layer, due to its increased requirements. Petroula Theodoridou did most of the work here and is also to thank for the intuitive graphical interface and its overall functionality.

Furthermore, we wanted to use the simulator included in the OpenDaVinci platform in order to test algorithms and tactics before deploying them in the non-deterministic real world environment, where many things can go wrong. The OpenDaVinci environment is written in C++. Therefore, in order to use the same code on the Android device and in the OpenDaVinci simulation, we wrapped the C++ functions with JNI, so that they could be called from Java. The main drawback of this approach was the difficulty of debugging the C++ side of things. Other than that, migrating code from the simulator to Android was trivial. Consequently, we had to develop the core driving logic in C++ and create the interface between C++ and Java. I was very little involved in these, mostly offering bug fixes and helping my colleagues understand practical limitations when it came to sensor readings and testing in the actual environment. David Jensen contributed by setting up the C++ wrapper and also the lane following, while Aurélien Hontabat worked on the parking. Ibtissam Karouach and Simeon Ivanov worked on the really challenging task of overtaking. I believe that Jiaxin Li chipped in there too. Last but not least, Yilmaz Caglar tried to enhance our lane following functionality with a PID controller. I will write more on the driving logic features later.

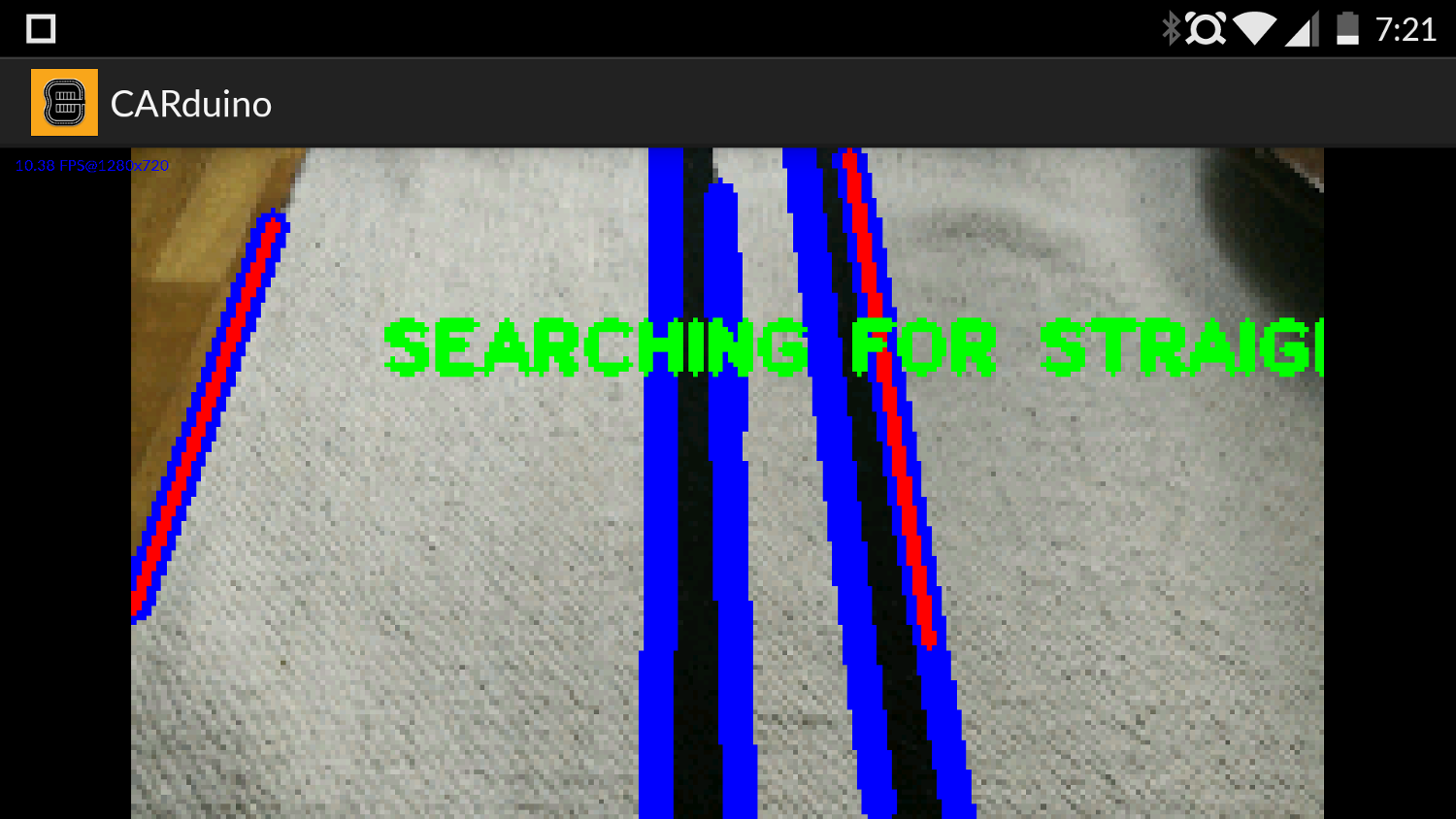

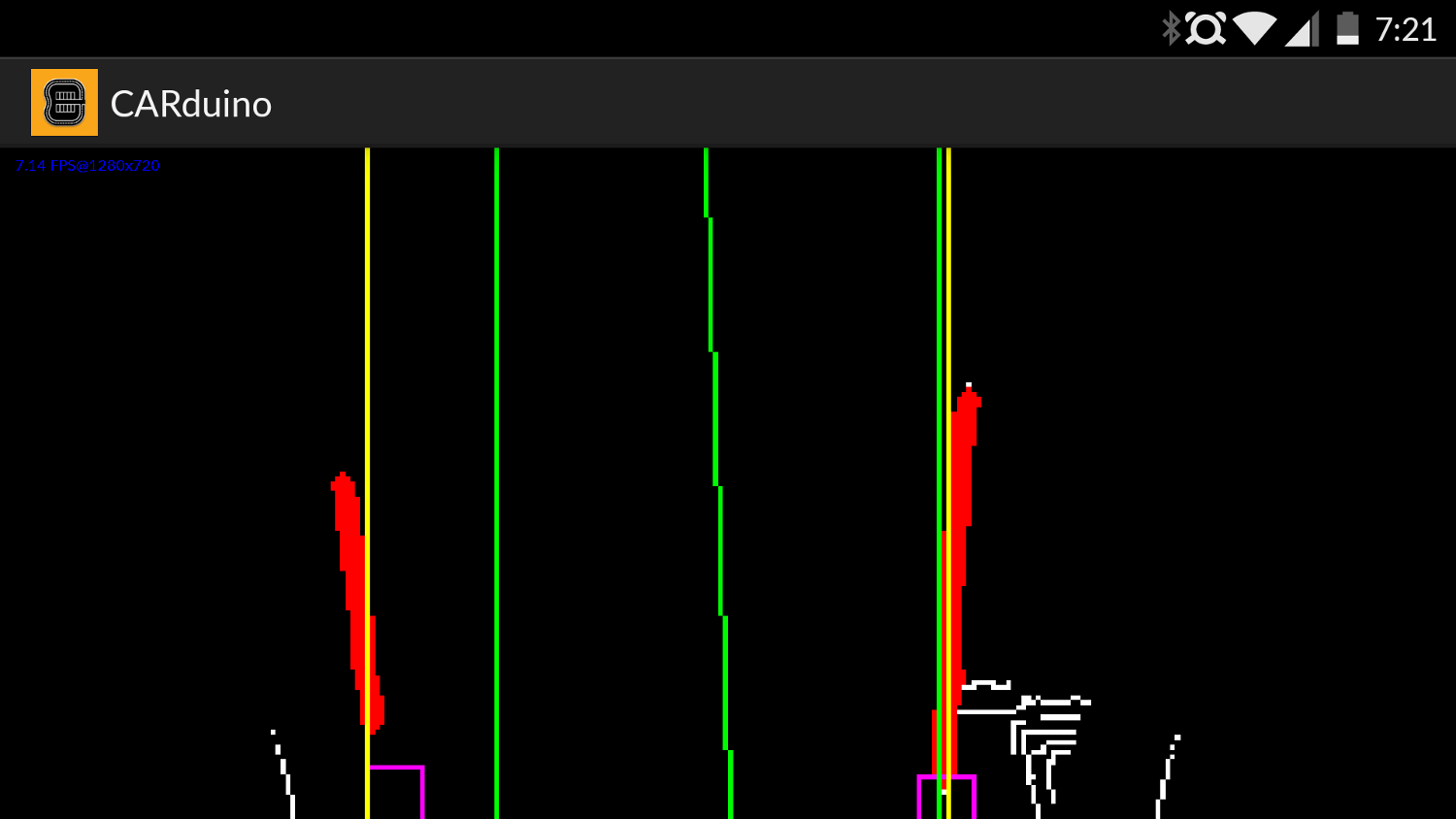

Finally, there was the image processing, which was written in C++ using OpenCV, in order to keep it consistent with the simulator. This enabled us to use sample recordings of the real environment (e.g. by mounting the camera on the vehicle and manually driving it along the test track) to test the various machine vision algorithms from the comfort of our laptops. Image processing was a really interesting field, which I sadly did not have the chance to work on at all. However, I was closely following the ones responsible for it and I am glad to say that I picked up a thing or two. Another feature of the application was that it could visualize the various transformations on the phone’s screen as they happened. This helped us do some on-the-spot debugging and also looks very cool. From a technical perspective, I know that we were applying light normalization, Canny edge detection, a Hough line transform and finally a perspective transform, in order to get a bird’s-eye (top-down) view of the video stream captured by the phone’s camera and later decide how to steer the car. Additionally, there is a custom made “line builder” functionality that uses trigonometry to iterate over the pixels with a probability estimate, so as to reduce false positives and decide which pixels or vectors are part of a street lane. David Jensen did a wonderful job on this. The following screenshots are from lines sought and found on… my living room carpet. It looks cooler on the actual track. :-D
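To give an idea of what the perspective transform step does, here is a minimal Python sketch (not the team's C++ code) that maps a single pixel through a 3x3 homography matrix. The function name `warp_point` and the example matrix are made up for illustration. In an OpenCV pipeline, the matrix would typically come from `getPerspectiveTransform` and be applied to whole frames with `warpPerspective`.

```python
def warp_point(h, x, y):
    """Map pixel (x, y) through a 3x3 homography h given as nested lists.

    The point is treated as the homogeneous vector (x, y, 1). After the
    matrix multiplication, dividing by the third component produces the
    perspective effect that "flattens" the road into a top-down view.
    """
    xw = h[0][0] * x + h[0][1] * y + h[0][2]
    yw = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    if w == 0:
        raise ValueError("point maps to infinity under this homography")
    return xw / w, yw / w


# Hypothetical matrix: the non-zero entry in the last row makes the scale
# depend on the image row, which is what distinguishes a perspective
# transform from a plain affine one.
EXAMPLE_H = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.5, 1.0],
]

if __name__ == "__main__":
    print(warp_point(EXAMPLE_H, 4.0, 2.0))
```

Once lane lines are expressed in the top-down view, they appear roughly parallel, which makes it simpler to reason about where the middle of the lane is.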

The Android application, which we named CARduino, was tested and found to work with Jelly Bean, KitKat and Lollipop on the following phones, all from 2014: JiaYu G4S, XiaoMi Mi3, OnePlus One.

You can try our various features (check the various branches) in the OpenDaVinci testing environment, using the resources found in this repository. It includes an image of the OpenDaVinci environment, with our image processor and driving logic features (parking, overtaking, lane following) ready to be compiled and run.

Features::Innovative hardware platform

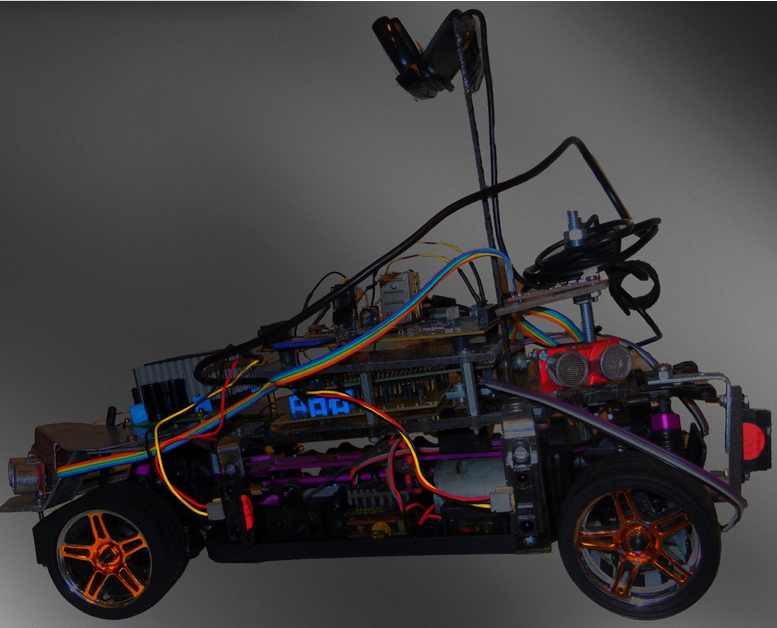

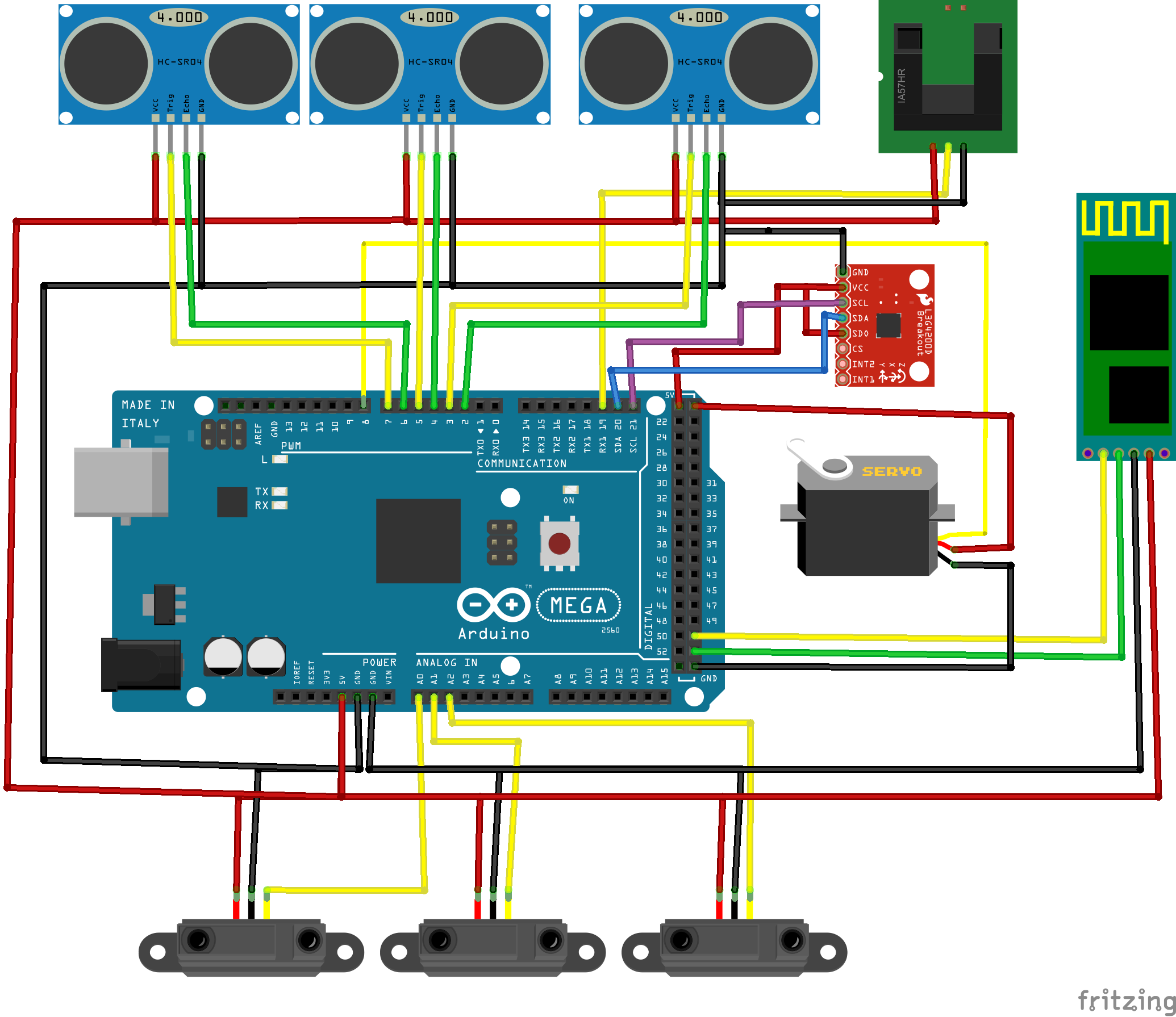

The physical platform was based on a 1/10th scale remote controlled car, which we basically hacked, starting by replacing its ESC, DC motor and servo motor. It is from the brand HSP Racing and you can find the model we got (or one almost like it) here. Next, we used a piece of black hard plastic to create a compartment, on which we placed the microcontroller (Arduino Mega), a gyroscope and cable terminals, among other components.

On one of the wheels, we fitted (with the aid of super glue) a speed encoder and, on the front bumper, a 9DOF IMU from Sparkfun, the Razor IMU. This board includes an accelerometer, a gyroscope, a magnetometer as well as an ATmega328P that runs sensor fusion firmware, which in turn calculates the displacement from north in three axes and transmits it via the serial port. Sadly, due to strong interference on the IMU's magnetometer (and possibly a lack of firmware optimization), we could not take advantage of this really cool and expensive piece of technology. Ideally, if we could find a place sufficiently far away from the motors and cables, it would increase the movement accuracy of our vehicle.

Moreover, inside the red enclosure, which makes our vehicle look like a fancy red race car, we mounted three infrared and three ultrasonic sensors as well as LED lamps that serve as flash and stop lights. In order to organize the cables, we created a wire bus that connects the middle compartment (with the Arduino and the gyroscope) to the upper one (the enclosure). Additionally, to decrease the number of connections that have to be made from the Arduino, we built an LED driver board based on the versatile ATtiny85, which receives signals over serial and blinks the corresponding LEDs. Edit 15/10/2015: The LED driver is now a PCB!

Currently, there are also two infrared arrays at the front of the car, as well as an ADNS3080-based optical flow sensor, but I will write more about it specifically once it works satisfactorily. To be precise, I am waiting for an extra component to arrive from China and, when it does, I hope I will be in a position to announce some very good news.

Features::Arduino library

On the software side of the physical layer, an Arduino library was created (based on previous work of mine [1], [2]) which encapsulates the usage of the various sensors and permits us to handle them in an object oriented manner. The API sports a high abstraction level, primarily targeting novice users who “just want to get the job done”. The exposed components should, however, also be enough for more intricate user goals. The library is not yet 100% ready to be deployed out of the box on different hardware platforms, as it was built for an in-house system after all, but with minor modifications that should not be a difficult task. The library was developed with the following components in mind: an ESC, a servo motor for steering, HC-SR04 ultrasonic distance sensors, SHARP GP2D120 infrared distance sensors, an L3G4200D gyroscope, a speed encoder and a Razor IMU. Finally, you can find the sketch running on the actual vehicle here. Keep in mind that all decision making is done on the mobile device, therefore the microcontroller’s responsibility is just to fetch commands, encoded as Netstrings, and execute them, while collecting sensor data and transmitting it.
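For readers unfamiliar with Netstrings: a payload is framed as its length in decimal, a colon, the payload itself and a trailing comma, e.g. `5:hello,`. The Python sketch below illustrates that framing only. The function names and the sample commands are hypothetical and do not reflect the actual command vocabulary used between the phone and the Arduino.

```python
def encode_netstring(payload: bytes) -> bytes:
    """Frame a payload as b"<length>:<payload>,"."""
    return str(len(payload)).encode("ascii") + b":" + payload + b","


def decode_netstrings(buffer: bytes):
    """Extract every complete netstring from a receive buffer.

    Returns (payloads, remainder), where remainder holds the bytes of a
    frame that has not fully arrived yet, so the caller can prepend it to
    the next chunk read from the serial or Bluetooth link.
    """
    payloads = []
    pos = 0
    while True:
        colon = buffer.find(b":", pos)
        if colon == -1:
            break  # the length prefix is not complete yet
        length_field = buffer[pos:colon]
        if not length_field.isdigit():
            raise ValueError("malformed netstring length")
        end = colon + 1 + int(length_field)  # index of the trailing comma
        if end >= len(buffer):
            break  # payload or trailing comma has not arrived yet
        if buffer[end:end + 1] != b",":
            raise ValueError("netstring is missing its trailing comma")
        payloads.append(buffer[colon + 1:end])
        pos = end + 1
    return payloads, buffer[pos:]


if __name__ == "__main__":
    # "m50" and "t-20" are made-up commands, used only to show the framing.
    stream = encode_netstring(b"m50") + encode_netstring(b"t-20")
    print(stream)
    print(decode_netstrings(stream + b"3:m"))
```

Because the length is transmitted up front, the receiver never has to scan the payload for a delimiter, which keeps parsing cheap on a microcontroller and makes truncated frames easy to detect.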

Features::Lane following

Lane following is the primary feature of our autonomous vehicle. It uses image processing, conducted through the OpenCV library, to make the car capable of driving within the appropriate street lane. I will not pretend I am aware of all the technical details, but from a high level perspective, we used OpenCV to define what a valid street lane is and, as long as we are able to find the lane markings, we make sure we stay in the middle of the lane. We tried to enhance this with a PID controller, however we faced some difficulties, mainly due to time constraints, so we never managed to fully implement and integrate this feature. The lane following feature of our vehicle, which is also the basis for overtaking, currently has a very high success rate on the race track we are using for testing. In a few months, we will be able to try it on a different track and we are hoping for similar results.
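As an illustration of the idea of staying in the middle of the lane with a PID controller, here is a generic textbook-style sketch in Python. It is not the controller the team attempted to integrate. The names `lane_center_error` and `PIDController`, the sign convention and the gains are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from typing import Optional


def lane_center_error(left_x: float, right_x: float, image_width: float) -> float:
    """Horizontal offset (in pixels) of the lane centre from the image centre.

    Assumed convention: a positive value means the lane centre lies to the
    right of where the camera is pointing.
    """
    return (left_x + right_x) / 2.0 - image_width / 2.0


@dataclass
class PIDController:
    kp: float
    ki: float
    kd: float
    output_limit: float  # e.g. the largest steering angle the servo accepts
    _integral: float = 0.0
    _previous_error: Optional[float] = None

    def update(self, error: float, dt: float) -> float:
        """Return a clamped control output for the current error sample."""
        if dt <= 0:
            raise ValueError("dt must be positive")
        self._integral += error * dt
        if self._previous_error is None:
            derivative = 0.0  # no history on the first sample
        else:
            derivative = (error - self._previous_error) / dt
        self._previous_error = error
        output = self.kp * error + self.ki * self._integral + self.kd * derivative
        return max(-self.output_limit, min(self.output_limit, output))


if __name__ == "__main__":
    pid = PIDController(kp=0.1, ki=0.0, kd=0.01, output_limit=30.0)
    error = lane_center_error(left_x=100.0, right_x=300.0, image_width=640.0)
    print(pid.update(error, dt=0.05))
```

In practice the gains would have to be tuned on the track, and the integral term usually needs some form of anti-windup, which this sketch leaves out.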

Finally, check out a video of lane following, thanks to David Jensen and his work. The car kept driving seamlessly around the track, until we decided to stop it. Looks neat, doesn’t it? :)

Features::Overtaking

Overtaking obstacles that happen to be in the car’s path is one of the most exciting and challenging features of the vehicle, since it combines machine vision with interpreting the inherently imperfect real world data from the sensors. While this feature worked perfectly fine in the OpenDaVinci simulations, with the vehicle successfully overtaking obstacles in many different scenarios, it was very interesting to see how dramatically things differed in reality! A lot of struggle, effort and sleepless nights were invested by Ibtissam Karouach and Simeon Ivanov, however the final result was very rewarding. The two main hurdles were, first, that the vehicle had to be constantly “aware” of its current position relative to the obstacle and, second, that due to the small size of our test track we found ourselves on a curve after overtaking an obstacle, so there was no time to realign with the appropriate street lane. We found a satisfactory work-around for the first problem by adding an infrared array below the car. This enabled us to know when we are switching lanes, with the infrared sensors sending a signal when they happen to detect the middle dashed line. Of course this is only a temporary solution, adopted due to pressing time constraints. A more “proper” one, based on image processing both for detecting the presence of an obstacle and for recognizing exactly when we switched lanes, is currently being devised. Check out our Android autonomous vehicle avoiding obstacles in the video below.
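The infrared work-around can be pictured as a small debounced detector: once the array has seen the middle line for a few consecutive samples and then loses it again, the line has passed under the car and the lane switch is considered done. The Python sketch below is only my illustration of that idea. The class name, the boolean input and the `min_consecutive` threshold are assumptions, not the code running on the car.

```python
class LaneSwitchDetector:
    """Reports a lane switch once the middle line has passed under the array."""

    def __init__(self, min_consecutive: int = 2):
        # Number of consecutive "line seen" samples required before the
        # detection is trusted, to filter out single-sample glitches.
        self.min_consecutive = min_consecutive
        self._hits = 0
        self._on_line = False

    def update(self, line_detected: bool) -> bool:
        """Feed one reading; returns True on the sample where the line is left behind."""
        if line_detected:
            self._hits += 1
            if self._hits >= self.min_consecutive:
                self._on_line = True
            return False
        crossed = self._on_line
        self._hits = 0
        self._on_line = False
        return crossed


if __name__ == "__main__":
    detector = LaneSwitchDetector(min_consecutive=2)
    readings = [False, True, False, True, True, True, False, False]
    print([detector.update(r) for r in readings])
```

One limitation worth keeping in mind is that the middle line is dashed, so the car could cross through a gap without triggering the array at all, which is one more reason why a vision-based solution is preferable.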

Features::Parking

Parking was another fun feature that we managed to include in our vehicle’s core functionality. It was challenging because we were completely reliant on sensor data, more specifically that of the gyroscope, the speed encoder, the infrared and the ultrasonic distance sensors, to determine the surroundings and park the car. Surprisingly, the amount of noise in the measurements was not a concern. We were, however, challenged by the inability of the distance sensors to function properly when facing an obstacle at a steep angle. This happens because the ultrasonic wave or the infrared beam bounces away and never returns to the sensor. Thus, even if we are close to an obstacle, it is not detected if the sensor is facing it at a steep angle. Unfortunately, this is an inconsistency between the simulator (where everything functions ideally) and the real life behavior of the sensors. We were therefore forced to change the sensor locations and try many things in the real environment, until we identified the ideal parameters for our algorithms to work. We are planning to improve this feature and make it more versatile by integrating a mouse sensor, in order to increase the car’s awareness of its position, as well as by researching ways to enhance the procedure with machine vision. Check out a parking video, by Aurélien Hontabat.
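To make the role of the speed encoder and the side-facing distance sensors more concrete, here is a hedged Python sketch of one common way to look for a parking spot: drive along the parked obstacles and search for a stretch of free readings that is long enough. The function name, the sample format and the thresholds are assumptions for illustration and are not taken from our driving logic.

```python
def find_parking_gap(samples, min_gap_length_cm, free_distance_cm):
    """Return (start_cm, end_cm) of the first sufficiently long gap, or None.

    samples: iterable of (travelled_cm, side_distance_cm) pairs, where
    travelled_cm comes from the speed encoder and side_distance_cm from a
    side-facing distance sensor (None when no echo was received).
    """
    gap_start = None
    for travelled, distance in samples:
        # A missing echo is treated as free space here. Since obstacles at a
        # steep angle can also produce no echo, requiring a minimum gap
        # length doubles as a guard against isolated missed detections.
        is_free = distance is None or distance >= free_distance_cm
        if is_free:
            if gap_start is None:
                gap_start = travelled
            if travelled - gap_start >= min_gap_length_cm:
                return gap_start, travelled
        else:
            gap_start = None  # an obstacle interrupts the current gap
    return None


if __name__ == "__main__":
    readings = [(0, 10), (5, 10), (10, 40), (15, None), (20, 45), (25, 42), (30, 12)]
    print(find_parking_gap(readings, min_gap_length_cm=15, free_distance_cm=30))
```

A routine like this only finds the gap. The parking maneuver itself would still rely on the gyroscope for the heading and on the rear sensors for the remaining distance.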

Retrospective

This project was overall very interesting and involved many of the typical challenges faced in physical computing, self driving vehicles and the automotive industry. Another useful experience that I personally acquired was how to manage and motivate a relatively large software development team, whose members had different technical backgrounds, priorities and availability. Sailing uncharted waters, attempting something that had not been done before and having to be continuously innovative in order to resolve issues did not make things easier. Judging by the final result, though, I believe a good job was done. It was also exciting to see our efforts recognized outside the academic community. In particular, we were invited to present our vehicle at HiQ, which is a large IT consulting company. Click on the link to find out more about our presentation at HiQ. Furthermore, we also had the honor of demonstrating some features of our vehicle during the inauguration ceremony of the Chalmers University president, Karin Markides, organized by SAFER.

I should also express our gratitude to our professor, Christian Berger, who offered us the chance to follow a fundamentally different approach to his course, to innovate and to productively express our creativity. I know from experience that this is not always the case in academia. Moreover, if you are interested in building autonomous vehicles and in a more complete, platform independent and polished solution, do not forget to check out the OpenDaVinci platform and its documentation!

To conclude, it is my firm belief that there is a lot of potential for future research and development on the subject, and I hope that the work done thus far can be the starting point of something bigger that will change the way we view transport.

EDIT: Our vehicle was featured on major maker websites and other outlets, such as Makezine, Atmel Corporation, the Arduino blog, Huffington Post [FR], Popular Mechanics and Omicrono, among others!